Google Gemma 3: The Future of AI Deployment on GPUs & TPUs

Gemma 3 is a groundbreaking addition to the Gemma family of open AI models, designed to make AI more accessible. Over the past year, the Gemma ecosystem has grown significantly, with more than 100 million downloads and over 60,000 unique model variants developed by the community. This “Gemmaverse” continues to drive AI innovation forward.

Built on the same research foundation as Gemini 2.0, Gemma 3 introduces a collection of lightweight, high-performance open models optimized for speed, efficiency, and responsible AI development. Designed to run on a variety of devices—from smartphones and laptops to high-powered workstations—Gemma 3 provides developers with unmatched flexibility. Available in multiple sizes (1B, 4B, 12B, and 27B), it allows users to select the best model for their hardware and performance needs.

This article explores the capabilities of Gemma 3, introduces ShieldGemma 2, and highlights how developers can contribute to the expanding Gemmaverse.

Why Gemma 3 Stands Out: Key Features

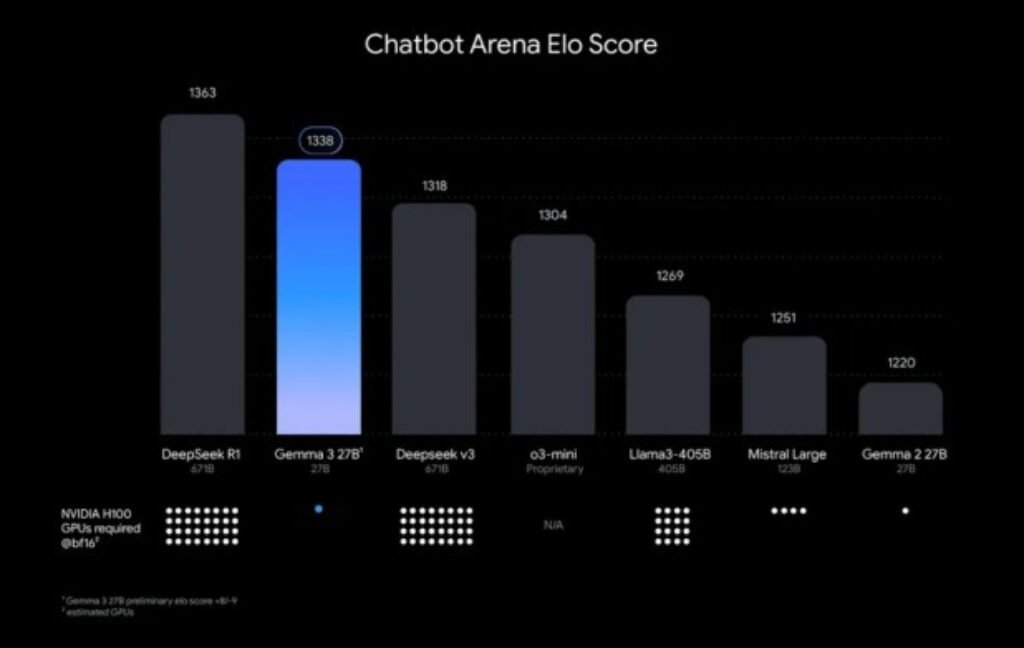

Optimized for Single-Accelerator Performance: Gemma 3 delivers state-of-the-art performance for its size, outperforming Llama3-405B, DeepSeek-V3, and o3-mini in preliminary human preference evaluations on the LMArena leaderboard. It is designed to run efficiently on a single GPU or TPU host.

Multilingual Support for Global Reach: With native support for 35+ languages and pre-trained capabilities for over 140 languages, Gemma 3 enables developers to build AI applications for diverse audiences.

Advanced Text and Visual Reasoning: The model supports text, image, and short video analysis, unlocking new possibilities for interactive AI applications.

Expanded Context Window: A 128k-token context window (32k tokens for the 1B model) allows AI applications to process and reason over long documents and conversations in a single pass.

Function Calling for Automated Workflows: Structured outputs and function-calling capabilities enable intelligent automation and AI-driven workflows.

High-Performance Quantized Models: Official quantized versions of Gemma 3 reduce model size and computational overhead while maintaining accuracy.
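To make the size and quantization trade-off concrete, here is a rough back-of-the-envelope sketch. It assumes weight memory is simply parameter count times bits per parameter, uses the nominal sizes named above, and picks 16-, 8-, and 4-bit widths purely for illustration (they are assumptions, not a list of the officially released formats).

```python
# Rough weight-memory estimate: parameters x bits per parameter / 8 bytes.
# Parameter counts are the nominal sizes named in the article; the bit widths
# are illustrative assumptions, not a list of officially released formats.

NOMINAL_PARAMS = {"1B": 1e9, "4B": 4e9, "12B": 12e9, "27B": 27e9}


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def footprint_table(bit_widths=(16, 8, 4)) -> dict:
    """Map each model size to its estimated weight memory per bit width."""
    return {
        name: {bits: weight_memory_gb(n, bits) for bits in bit_widths}
        for name, n in NOMINAL_PARAMS.items()
    }


# Print one row per model size, e.g. the 27B row shows 54.0, 27.0 and 13.5 GB.
for name, row in footprint_table().items():
    cells = ", ".join(f"{bits}-bit: {gb:.1f} GB" for bits, gb in row.items())
    print(f"{name:>3}  {cells}")
```

These figures cover weights only. Activations and the key-value cache add to the total, and the cache grows with context length, so treat the numbers as lower bounds when matching a model to an accelerator.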

Building AI Responsibly: Rigorous Safety Protocols

Open AI models require thorough risk assessments, and Gemma 3 follows strict safety policies. Extensive data governance, fine-tuning, and benchmark evaluations ensure responsible AI deployment.

Because of its enhanced STEM capabilities, Gemma 3 underwent specialized evaluations of its potential for misuse in creating harmful substances. These assessments indicated a low risk level. As AI progresses, continuous refinement of safety practices remains a priority.

Introducing ShieldGemma 2: Built-in AI Safety for Images

Alongside Gemma 3, ShieldGemma 2 launches as a robust 4B image safety model designed to enhance responsible AI use. ShieldGemma 2 classifies images into three key safety categories:

Dangerous content

Sexually explicit material

Violence

Developers can customize ShieldGemma 2 to meet specific safety needs, leveraging Gemma 3’s architecture for built-in content moderation.
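One way to picture this kind of customization is a thin policy layer on top of the classifier's output. The sketch below is hypothetical: it assumes the model's result has already been reduced to one probability-like score per category, and the function name, score format, and threshold values are all invented for illustration rather than taken from ShieldGemma 2's actual interface.

```python
# The three categories come from the article; the score format and the
# threshold values are hypothetical, chosen only to illustrate a policy layer.
CATEGORIES = ("dangerous_content", "sexually_explicit", "violence")


def flag_image(scores: dict, thresholds: dict) -> list:
    """Return the categories whose score meets or exceeds its threshold."""
    missing = [c for c in CATEGORIES if c not in scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    return [c for c in CATEGORIES if scores[c] >= thresholds[c]]


# Two example policies: a strict one, and one that tolerates more violence
# (for instance, a news-imagery application).
strict = {c: 0.2 for c in CATEGORIES}
lenient = {**strict, "violence": 0.8}

# Hypothetical scores for a single image.
example_scores = {
    "dangerous_content": 0.05,
    "sexually_explicit": 0.01,
    "violence": 0.45,
}

print(flag_image(example_scores, strict))   # ['violence']
print(flag_image(example_scores, lenient))  # []
```

Keeping the thresholds in application code means the same classifier can serve products with different moderation requirements without retraining.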

Seamless Integration with Developer Tools

Gemma 3 and ShieldGemma 2 fit into existing development workflows, with support for Hugging Face Transformers, Ollama, JAX, PyTorch, Google AI Edge, Unsloth, vLLM, and gemma.cpp.

Start Building in Seconds: Instantly access Gemma 3 via Google AI Studio, or download models from Hugging Face, Kaggle, or Ollama.

Fine-Tune with Ease: Customize Gemma 3 using fine-tuning recipes on platforms like Google Colab, Vertex AI, or local GPUs.

Deploy Across Multiple Environments: Supported on Vertex AI, Cloud Run, Google GenAI API, and local setups for flexible deployment.

Optimized for GPUs and TPUs: NVIDIA has optimized Gemma 3 for GPUs of every size, from Jetson Nano to Blackwell chips, and the models are also optimized for Google Cloud TPUs.

The Expanding Gemmaverse: A Community-Driven Ecosystem

The Gemmaverse fosters AI innovation through community contributions. Notable projects include:

AI Singapore’s SEA-LION v3: Advancing multilingual AI for Southeast Asia.

INSAIT’s BgGPT: Bulgaria’s first large-scale language model.

Nexa AI’s OmniAudio: Powering on-device AI audio processing.

To support AI research, the Gemma 3 Academic Program offers Google Cloud credits worth $10,000 per award. Applications remain open for four weeks; apply on the official website.

Get Started with Gemma 3

Gemma 3 marks a significant step in democratizing AI access. Developers can begin using it immediately:

Explore Instantly

Try Gemma 3 in the browser via Google AI Studio

Obtain an API key and start building with Google GenAI SDK

Customize & Build

Download Gemma 3 from Hugging Face, Ollama, or Kaggle

Fine-tune with Hugging Face Transformers or other tools
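As a starting point for the Hugging Face route, the following is a minimal sketch of text generation with the Transformers `pipeline` API. It assumes a recent `transformers` release with Gemma 3 support, a model ID of `google/gemma-3-1b-it` (not named in this article, so treat it as an assumption), and that you have accepted the model license on Hugging Face. The helper names are illustrative.

```python
def build_messages(user_text: str) -> list:
    """Wrap a prompt in the chat-message format the pipeline accepts."""
    return [{"role": "user", "content": user_text}]


def generate(
    user_text: str,
    model_id: str = "google/gemma-3-1b-it",  # assumed model ID
    max_new_tokens: int = 128,
) -> str:
    """Download the model if needed and return the assistant's reply."""
    # Imported lazily so the helpers above work without transformers installed.
    from transformers import pipeline

    pipe = pipeline("text-generation", model=model_id)
    outputs = pipe(build_messages(user_text), max_new_tokens=max_new_tokens)
    # With chat-style input, generated_text holds the whole conversation;
    # the last message is the newly generated assistant turn.
    return outputs[0]["generated_text"][-1]["content"]


# Shows the message structure only; no model is loaded at this point.
print(build_messages("Summarize what a context window is in one sentence."))
```

Calling `generate("...")` triggers the model download on first use, so the smallest size is a sensible choice for a first experiment.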

Deploy & Scale

Scale deployments with Vertex AI

Run inference on Cloud Run with Ollama
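For the Ollama route, inference usually goes through Ollama's HTTP API. The sketch below builds and sends a request to the `/api/generate` endpoint using only the standard library. The base URL shown is Ollama's local default and stands in for whatever URL your Cloud Run service exposes, and the `gemma3:1b` model tag is an assumption; both should be adjusted to your deployment.

```python
import json
import urllib.request


def build_generate_request(
    prompt: str,
    model: str = "gemma3:1b",                 # assumed Ollama model tag
    base_url: str = "http://localhost:11434",  # replace with your service URL
) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, **kwargs) -> str:
    """Send the request and return the generated text."""
    request = build_generate_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["response"]
```

A call such as `generate("Why is the sky blue?", base_url=...)` returns the model's answer as a string. A Cloud Run service that requires authentication would also need an identity token in the request headers, which this sketch leaves out.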

As AI technology advances, Gemma 3 provides the tools needed to build, customize, and deploy cutting-edge AI applications efficiently and responsibly.