Alibaba's R1-Omni: Redefining AI-Powered Emotion Recognition with Reinforcement Learning

Alibaba Group has taken a notable step in artificial intelligence with the release of R1-Omni, a multimodal AI model designed for emotion recognition. Developed by Tongyi Lab, R1-Omni is presented by its developers as the first omni-multimodal large language model to apply Reinforcement Learning with Verifiable Rewards (RLVR) to emotion recognition, an approach intended to improve both accuracy and transparency in AI-driven emotional analysis.

As Alibaba strengthens its position in the AI race against competitors like OpenAI and DeepSeek, R1-Omni emerges as a key innovation in multimodal AI, seamlessly integrating audio and visual data for superior human emotion detection.

Key Features of R1-Omni

1. Multimodal Emotion Recognition

Unlike traditional AI models that rely on text-based inputs, R1-Omni leverages video frames and audio streams to interpret human emotions with high accuracy. This allows for deeper contextual understanding, enabling applications in mental health, customer service, and human-computer interaction.

2. Reinforcement Learning with Verifiable Rewards (RLVR)

R1-Omni is the first AI model to apply RLVR to emotion recognition, introducing an advanced training method that:

- Improves prediction accuracy by optimizing reward functions.

- Enhances AI transparency, showing how audio and visual inputs influence decision-making.

- Aims to reduce bias, supporting more ethical AI-driven analysis.
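To make "verifiable rewards" concrete, here is a minimal sketch of a rule-based reward. It assumes, without confirmation from this article, that the model writes its reasoning inside `<think>` tags and its final label inside `<answer>` tags, and that the total reward is the sum of an accuracy term and a format term. The function names are hypothetical and are not taken from the R1-Omni codebase.

```python
import re

# Assumed output template: reasoning in <think>, final label in <answer>.
_TEMPLATE = re.compile(
    r"\s*<think>(.+?)</think>\s*<answer>(.+?)</answer>\s*", re.DOTALL
)


def format_reward(output: str) -> float:
    """Return 1.0 if the whole output follows the assumed template, else 0.0."""
    return 1.0 if _TEMPLATE.fullmatch(output) else 0.0


def accuracy_reward(output: str, label: str) -> float:
    """Return 1.0 if the text inside <answer> equals the ground-truth label."""
    match = re.search(r"<answer>(.*?)</answer>", output, re.DOTALL)
    if match is None:
        return 0.0
    predicted = match.group(1).strip().lower()
    return 1.0 if predicted == label.strip().lower() else 0.0


def total_reward(output: str, label: str) -> float:
    """Sum of the two rule-based terms (an assumed combination)."""
    return accuracy_reward(output, label) + format_reward(output)


sample = "<think>Raised voice and furrowed brow.</think><answer>angry</answer>"
print(total_reward(sample, "angry"))  # 2.0
```

Because both terms are computed by fixed rules rather than by a learned reward model, anyone can recheck a given reward from the output and the label alone, which is what makes it "verifiable".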

3. Enhanced Generalization Capabilities

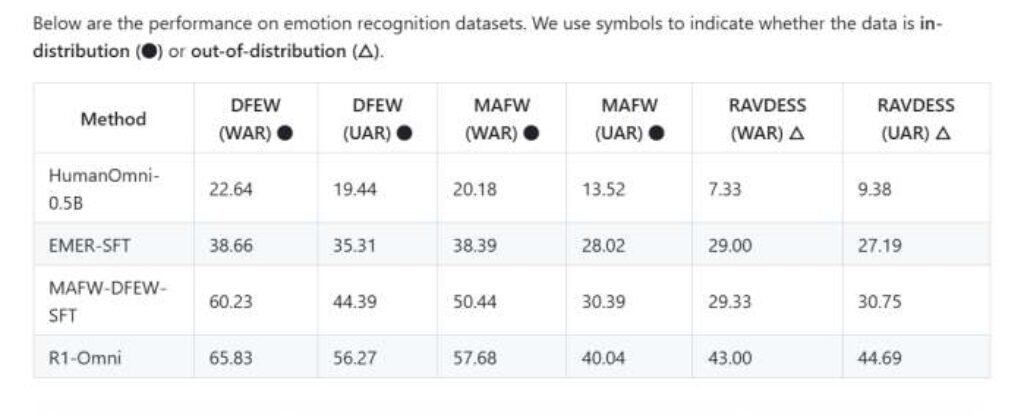

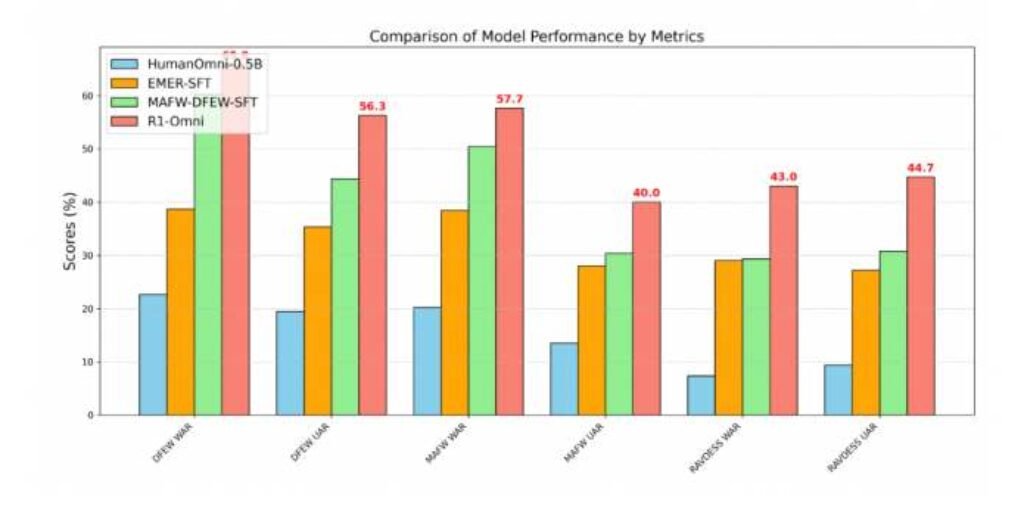

R1-Omni significantly outperforms traditional supervised fine-tuning models in emotion recognition, especially in out-of-distribution scenarios.

- 35% improvement over baseline models.

- 13% boost in generalization across diverse datasets.

- Superior weighted and unweighted average recall (WAR & UAR) in emotional classification tasks.
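For readers unfamiliar with the two metrics, the sketch below computes them on a toy example. WAR is recall weighted by class frequency, which equals overall accuracy, while UAR is the plain average of per-class recall, so it is not dominated by frequent emotions. The helper name `war_uar` and the sample labels are illustrative assumptions, not data from the R1-Omni evaluation.

```python
from collections import defaultdict


def war_uar(y_true, y_pred):
    """Return (WAR, UAR) for two equal-length label sequences."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    total = defaultdict(int)    # samples per true class
    correct = defaultdict(int)  # correctly predicted samples per true class
    for truth, pred in zip(y_true, y_pred):
        total[truth] += 1
        if truth == pred:
            correct[truth] += 1
    # WAR: every sample counts equally, so frequent classes weigh more.
    war = sum(correct.values()) / len(y_true)
    # UAR: every class counts equally, regardless of its size.
    uar = sum(correct[c] / total[c] for c in total) / len(total)
    return war, uar


# Toy, made-up labels: three "happy" clips and one "sad" clip.
y_true = ["happy", "happy", "happy", "sad"]
y_pred = ["happy", "happy", "sad", "sad"]
war, uar = war_uar(y_true, y_pred)
print(f"WAR={war:.3f} UAR={uar:.3f}")  # WAR=0.750 UAR=0.833
```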

4. Industry-Leading Transparency in AI Decision-Making

One of the most innovative aspects of R1-Omni is its ability to provide clear, structured reasoning behind its decisions. This transparency lets developers and researchers trace how the model arrives at a conclusion, a feature crucial for trustworthy AI deployment.

Why Alibaba's R1-Omni is a Game-Changer

A Strategic Move in the AI Race

Alibaba’s unveiling of R1-Omni follows the January 2025 launch of DeepSeek-R1, a competing model from DeepSeek that has gained traction in the market. By open-sourcing R1-Omni, Alibaba aims to democratize AI access, contrasting sharply with OpenAI’s subscription-based pricing for GPT-4.5.

Furthermore, Alibaba has formed a strategic partnership with Apple, signaling a long-term vision for integrating AI into consumer technologies.

Practical Applications of R1-Omni

R1-Omni has vast potential across multiple industries:

✅ Mental Health & Therapy: AI-assisted emotional support for mental wellness.

✅ Human-Computer Interaction: Personalized AI responses based on user emotions.

✅ Customer Service & Sentiment Analysis: Enhanced chatbot and call center automation.

✅ Security & Surveillance: Real-time emotional analysis for safety monitoring.

How to Access Alibaba's R1-Omni

Alibaba has made R1-Omni available to developers and researchers through multiple channels:

- GitHub Repository: HumanMLLM/R1-Omni

- Model Weights on ModelScope: iic/R1-Omni-0.5B

- Research Paper on arXiv: Read the full technical breakdown
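As a starting point, the weights could be fetched from ModelScope with a few lines of Python. This is a sketch that assumes the `modelscope` package is installed (`pip install modelscope`) and that the model ID listed above is still current. The wrapper name `download_r1_omni` and the `./models` cache directory are illustrative choices; running inference afterwards still requires the setup described in the GitHub repository.

```python
MODEL_ID = "iic/R1-Omni-0.5B"  # ModelScope model ID as listed above


def download_r1_omni(cache_dir: str = "./models") -> str:
    """Download the model snapshot and return the local directory path."""
    # Imported lazily so this file loads even where modelscope is not installed.
    from modelscope import snapshot_download

    return snapshot_download(MODEL_ID, cache_dir=cache_dir)


# Usage (needs network access and enough disk space):
#   local_path = download_r1_omni()
#   print(local_path)
```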

Final Thoughts: The Future of Multimodal AI

With R1-Omni, Alibaba has set a new standard for emotion recognition AI, leveraging reinforcement learning to create a model that is more accurate, transparent, and ethically responsible. As AI technology continues to evolve, multimodal models like R1-Omni will play a crucial role in bridging the gap between AI and human emotional intelligence.

Whether you’re a researcher, developer, or business owner looking to integrate cutting-edge AI capabilities, R1-Omni provides an open-source, high-performance solution that redefines the future of emotion-aware AI.

Get Started Today!

Download R1-Omni and explore its potential—the future of AI-driven emotion recognition is here.